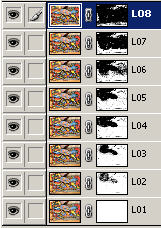

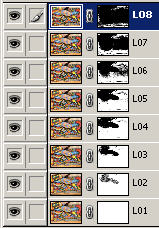

mN: mask type

- m0: hard-edged masks, mutually exclusive
- m1: hard-edged masks, stack of nested masks
- m2: blended masks, stack of nested masks (m2 is the default and is strongly recommended; this option includes a smoothing computation that seems to help a lot.)
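To make the difference between hard-edged and blended masks concrete, here is a minimal sketch that starts from one per-pixel focus score map per source frame. The text above does not say how the nested masks of m1 and m2 are built, so this is not the tool's method. `hard_masks` shows the m0 idea: each pixel goes to exactly one frame, the sharpest there, so the masks are 0/1 and mutually exclusive. `blended_weights` is a generic soft alternative, assumed here purely for illustration, that weights each frame by its share of the total focus score. Both function names are hypothetical.

```python
def hard_masks(focus_maps):
    """m0-style sketch: assign each pixel to the frame with the highest focus
    score, giving hard-edged (0/1), mutually exclusive masks."""
    n_frames = len(focus_maps)
    h, w = len(focus_maps[0]), len(focus_maps[0][0])
    masks = [[[0] * w for _ in range(h)] for _ in range(n_frames)]
    for y in range(h):
        for x in range(w):
            # max() returns the first frame on ties
            best = max(range(n_frames), key=lambda k: focus_maps[k][y][x])
            masks[best][y][x] = 1
    return masks


def blended_weights(focus_maps):
    """Hypothetical soft alternative: weight each frame by its share of the
    total focus score at that pixel; weights at each pixel sum to 1."""
    n_frames = len(focus_maps)
    h, w = len(focus_maps[0]), len(focus_maps[0][0])
    weights = [[[0.0] * w for _ in range(h)] for _ in range(n_frames)]
    for y in range(h):
        for x in range(w):
            total = sum(focus_maps[k][y][x] for k in range(n_frames))
            for k in range(n_frames):
                # fall back to equal weights where no frame has any detail
                weights[k][y][x] = (focus_maps[k][y][x] / total
                                    if total > 0 else 1.0 / n_frames)
    return weights


# Two frames, a 1x2 image: frame 1 is sharper on the left, frame 0 on the right
maps = [[[1, 5]], [[3, 2]]]
print(hard_masks(maps))       # [[[0, 1]], [[1, 0]]]
print(blended_weights(maps))
```

Hard masks can produce visible seams where the winning frame changes, which is one plausible reason a blended mode with smoothing would look better.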

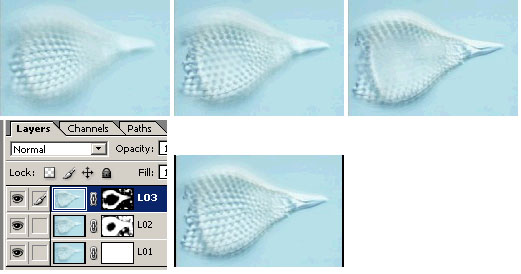

fN: focus estimation window size, where N is the halfwidth of the window. The recommended value is 0.5% of the image width, e.g. 4 pixels for an 800-pixel image. Computation cost for focus estimation increases in proportion to N^2. Default: f4.
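The sizing rule and the N^2 cost can be checked with a short sketch. A square window of halfwidth N covers (2N+1)^2 pixels, which grows roughly as 4N^2, so a naive per-pixel computation over that window scales with N^2. The text does not say which focus measure the tool uses; local intensity variance is assumed here only as a common example. All function names are hypothetical.

```python
def recommended_halfwidth(image_width):
    """0.5% of the image width, but never less than 1 pixel."""
    return max(1, round(0.005 * image_width))


def window_pixels(n):
    """A square window of halfwidth n spans (2n+1) x (2n+1) pixels."""
    return (2 * n + 1) ** 2


def local_variance(image, n):
    """Assumed focus measure: variance of the intensities inside the
    (2n+1) x (2n+1) window around each pixel, clipped at the borders.
    Each pixel visits up to window_pixels(n) values, hence O(n^2) cost."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [image[yy][xx]
                    for yy in range(max(0, y - n), min(h, y + n + 1))
                    for xx in range(max(0, x - n), min(w, x + n + 1))]
            mean = sum(vals) / len(vals)
            out[y][x] = sum((v - mean) ** 2 for v in vals) / len(vals)
    return out


n = recommended_halfwidth(800)        # 4, matching the default f4
print(n, window_pixels(n))            # 4 81
print(local_variance([[0, 10], [10, 0]], 1))
```

Higher variance means more local contrast, which is taken as a sign of sharper focus.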

sN: smoothing window size, where N is the halfwidth of the window. The recommended value is 0.5% of the image width, e.g. 4 pixels for an 800-pixel image. Computation cost for smoothing increases in proportion to N^2. Default: s4.
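The text does not describe the smoothing filter itself. As a hedged illustration, here is a plain box average over a window of halfwidth N, assumed here as the simplest filter that shows how the window size sets both the reach of the smoothing and its (2N+1)^2 per-pixel cost. The function name `box_smooth` is hypothetical.

```python
def box_smooth(values, n):
    """Assumed smoothing: replace each value with the mean of the
    (2n+1) x (2n+1) window around it, clipped at the image borders."""
    h, w = len(values), len(values[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            rows = range(max(0, y - n), min(h, y + n + 1))
            cols = range(max(0, x - n), min(w, x + n + 1))
            total = sum(values[yy][xx] for yy in rows for xx in cols)
            out[y][x] = total / (len(rows) * len(cols))
    return out


spike = [[0, 0, 0],
         [0, 9, 0],
         [0, 0, 0]]
print(box_smooth(spike, 1))
# centre averages 9 pixels -> 1.0; a corner averages 4 pixels -> 2.25
```

A larger N spreads an isolated value over a wider area, which evens out noisy decisions between neighbouring pixels at a cost that grows with N^2.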

The seven source images for this montage cannot be registered as perfectly as in our first two examples. Due to natural movement of the flower stem, the flower rotates slightly between shots and also shifts across the background. Here are all seven frames, before and after registration, animated as a film loop.
